Understanding what crawl budget means and how to optimize it for SEO is important if you want full control over what happens with your website.

It’s a highly technical subject and that’s why most webmasters tend to avoid it, but it doesn’t have to be this way.

In this guide, you’ll learn, in simple language, what crawl budget is (and related terms like crawl rate and crawl stats), how it affects SEO and what actions you can take to optimize it.

- What Is Crawl Budget?

- Why Is Crawl Budget Important for SEO?

- How to Optimize Crawl Budget for SEO?

- How to Check and Interpret your Crawl Stats Report?

What is Crawl Budget?

Crawl budget is not a single number but a general term that describes how many pages Google crawls and indexes from a particular website, and how often, over a given period of time.

Factors affecting crawl budget are website and navigation structure, duplicate content (within the site), soft 404 errors, low-value pages, website speed and hacking issues.

Why Is Crawl Budget Important for SEO?

It should be emphasized from the beginning that crawling is not a ranking signal.

This means that crawling does not directly affect the position at which a page appears in organic search results.

But crawling and crawl budgets are important for SEO because:

- If a page is not indexed by search engines, it won’t appear for ANY searches

- If a website has a lot of pages, Google may not index them all (that’s why crawl budget optimization is necessary – more on this below)

- Changes made to a page may not appear as fast as they should in the search results.

What is crawl budget optimization?

Crawl budget optimization is the process of checking and making sure that search engines can crawl and index all important pages of your site on time.

Crawl budget optimization is not usually an issue with small websites but it’s more important for big websites that have thousands of URLs.

Nevertheless, as you will read below, the way to optimize your crawl budget is by following SEO best practices and this has a positive effect on your rankings too.

How to Optimize Your Crawl Budget for SEO

Follow the 10 tips below to optimize the crawl budget for SEO.

- Provide for a hierarchical website structure

- Optimize Internal linking

- Improve your Website speed

- Solve duplicate content issues

- Get rid of thin content

- Fix Soft 404 errors

- Fix Crawl errors

- Avoid having too many redirects

- Make sure that you have no hacked pages

- Improve your website’s reputation (External links)

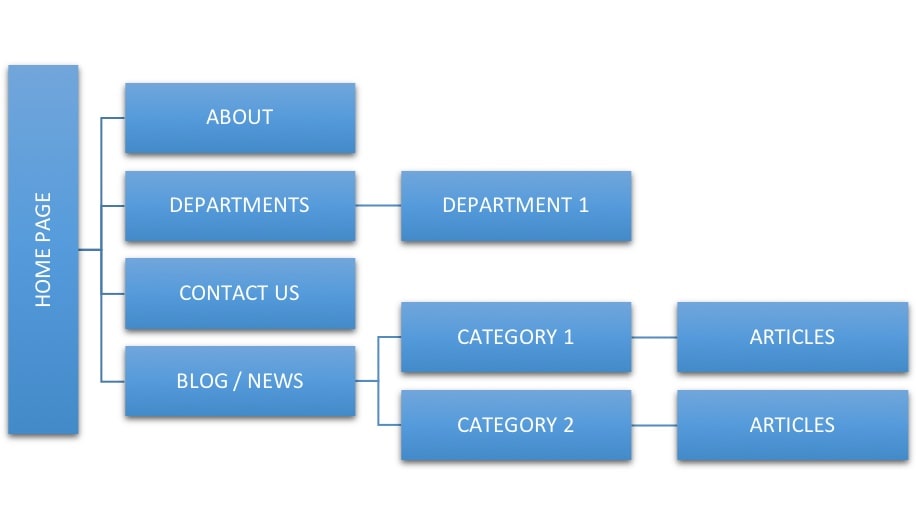

1. Provide for a hierarchical website structure

When search engine crawlers visit a site, they start from the homepage and then follow any links to discover, crawl and index all website pages.

A hierarchical site structure that is no more than three levels deep is ideal for any kind of website.

This means that any URL should be accessible from the homepage in three clicks or less.

This simple structure makes crawling easier and faster and it’s good for the users too.
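If you have a list of your internal links (for example, exported from a crawling tool), you can check the three-click rule with a breadth-first search from the homepage. The following is a minimal sketch, not a full crawler: the `SITE_LINKS` map and its URLs are made up for illustration, and the function names are hypothetical.

```python
from collections import deque


def click_depths(links, homepage="/"):
    """Return the minimum number of clicks from the homepage to each reachable page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in a BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, homepage="/", max_clicks=3):
    """List pages that need more than max_clicks clicks to reach."""
    depths = click_depths(links, homepage)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


# Hypothetical link map: each page lists the internal pages it links to.
SITE_LINKS = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/seo/"],
    "/blog/seo/": ["/blog/seo/crawl-budget/"],
    "/blog/seo/crawl-budget/": ["/blog/seo/crawl-budget/archive/"],
    "/shop/": [],
}

print(too_deep(SITE_LINKS))
```

Any page reported by `too_deep` is a candidate for a link from a higher-level page, such as a category page or the homepage.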

2. Optimize Internal linking

For any type of website, search engines like to give more priority (when it comes to crawling and indexing) to the most important pages of a site.

One of the ways they use to identify the important pages of a site is the number of external and internal links a webpage has.

External links are more important but harder to get, while it’s easy for any webmaster to optimize their internal links.

Optimizing internal links in a way that helps crawl budget means making sure that:

- The most valuable pages of your site have the greatest number of internal links.

- All your important pages are linked to from the homepage.

- All pages of your site have at least one internal link pointing to them.

Having pages on your site that have no internal or external links (also called ‘orphan pages’) makes the job of search engine bots more difficult and they waste your crawl budget.
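To see how this looks in practice, the sketch below counts internal links per page and lists pages that receive none. It only looks at internal links and assumes you already have two inputs: a list of all known pages (for example, from your XML sitemap) and a map of which page links to which. The data and function names are hypothetical.

```python
def inlink_counts(links, all_pages):
    """Count how many distinct pages link to each known page."""
    counts = {page: 0 for page in all_pages}
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once
            if target != source and target in counts:
                counts[target] += 1
    return counts


def orphan_pages(links, all_pages, homepage="/"):
    """Return pages (other than the homepage) with no internal links pointing to them."""
    counts = inlink_counts(links, all_pages)
    return sorted(page for page, n in counts.items() if n == 0 and page != homepage)


# Hypothetical example data.
ALL_PAGES = ["/", "/services/", "/blog/", "/old-landing-page/"]
LINKS = {
    "/": ["/services/", "/blog/"],
    "/blog/": ["/services/"],
}

print(inlink_counts(LINKS, ALL_PAGES))
print(orphan_pages(LINKS, ALL_PAGES))
```

Sorting the output of `inlink_counts` is also a quick way to confirm that your most valuable pages are the ones with the most internal links.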

Internal linking SEO best practices – A comprehensive guide on how to optimize your internal link structure.

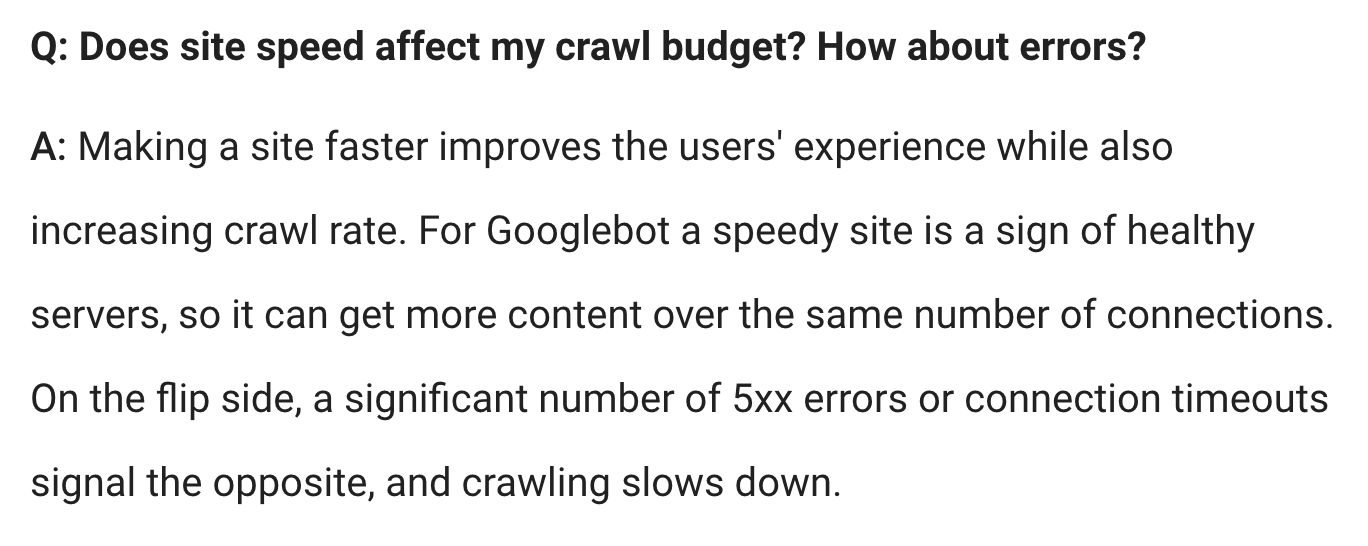

3. Improve your Website speed

Speed is an important ranking factor, a great usability factor and a factor that affects the crawl budget.

Simply put, when a website loads fast, Googlebot can crawl more pages of the same site in less time. This is a sign of a healthy website infrastructure and an encouragement to crawlers to get more content from the particular site.

This is what Google mentions about site speed and crawl budget.

As a webmaster, your job is to make every effort to ensure that your webpages load as fast as possible on all devices.

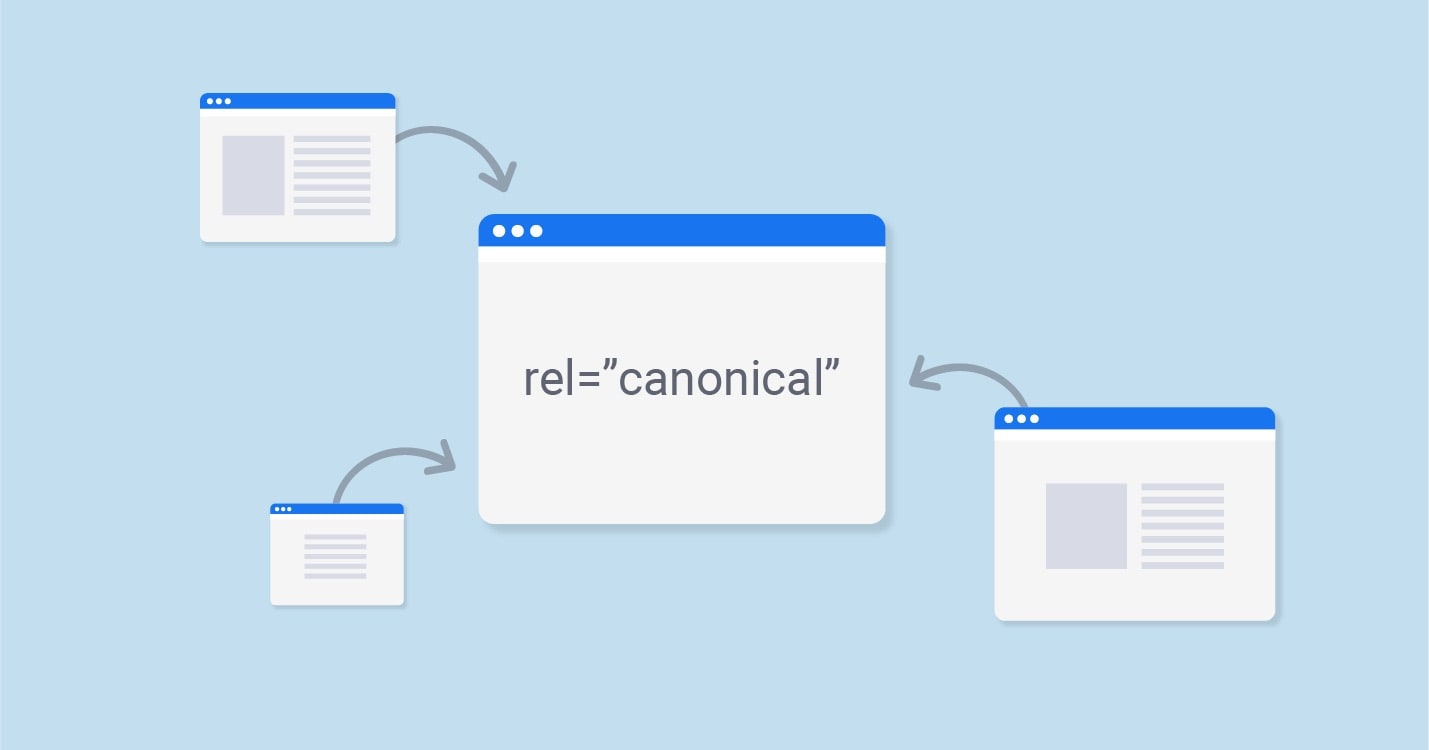

4. Solve Duplicate content issues

One of the factors that can negatively impact crawl budget is on-site duplicate content.

Duplicate content in this context is identical or very similar content appearing in more than one URL on your site.

This is a very common issue in eCommerce category pages where similar products are listed in more than one category.

Besides eCommerce sites, blogs can have issues with duplicate content. For example, if you have a number of pages targeting the same keywords, and the content on those pages is similar, then Google may consider this duplicate content.

How does duplicate content impact crawl budget?

It makes the job of Googlebot more difficult because it has to decide which of the pages to index.

Crawling resources get wasted on pages that Google will eventually mark as duplicate content.

Pages that are more valuable to the site may not get indexed because the crawl rate limit may have been reached while crawling and indexing duplicate content pages.

How to solve duplicate content issues?

The best way to solve duplicate content issues is to:

- Use canonical URLs to specify the preferred URL for each and every page on your site.

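- Use robots.txt to block search engine bots from crawling duplicate content pages, or the noindex directive to keep those pages out of the index. Avoid combining both on the same URL, because a bot that is blocked by robots.txt cannot see the noindex directive.

- Optimize your XML sitemap to help search engines identify which pages of your site they should prioritize.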
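Before you rely on robots.txt rules, it helps to check which URLs they actually block. The sketch below uses Python’s standard `urllib.robotparser` module for this. The rules and the example.com URLs are made up for illustration, and Python’s parser may not match Googlebot’s handling of every rule (wildcards in particular), so treat the result as a rough check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules that block tag archives and internal search results.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tag/
Disallow: /search
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


URLS = [
    "https://example.com/tag/seo/",
    "https://example.com/blog/crawl-budget/",
    "https://example.com/search?q=seo",
]

print(blocked_urls(ROBOTS_TXT, URLS))
```

If an important page shows up in the blocked list, the rule is too broad and is keeping crawlers away from content you want crawled.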

5. Get rid of thin content

Similar to duplicate content, another factor that can impact crawl budget is thin content pages.

Thin content refers to pages on your site that have little or no content and add no value to the user. They are also referred to as low-quality pages or low-value pages.

Examples are pages that have no text content, empty pages or old published pages that are no longer relevant to either search engines or users.

To optimize your crawl budget, you should find and fix thin content pages by:

- Removing them

- Enhancing their content to add value to users and republishing them

- Blocking them from search engines (noindexing them)

- Redirecting them to a different but more valuable page on your site

By taking any of the above actions, crawling time will be allocated to pages that are important for your site.

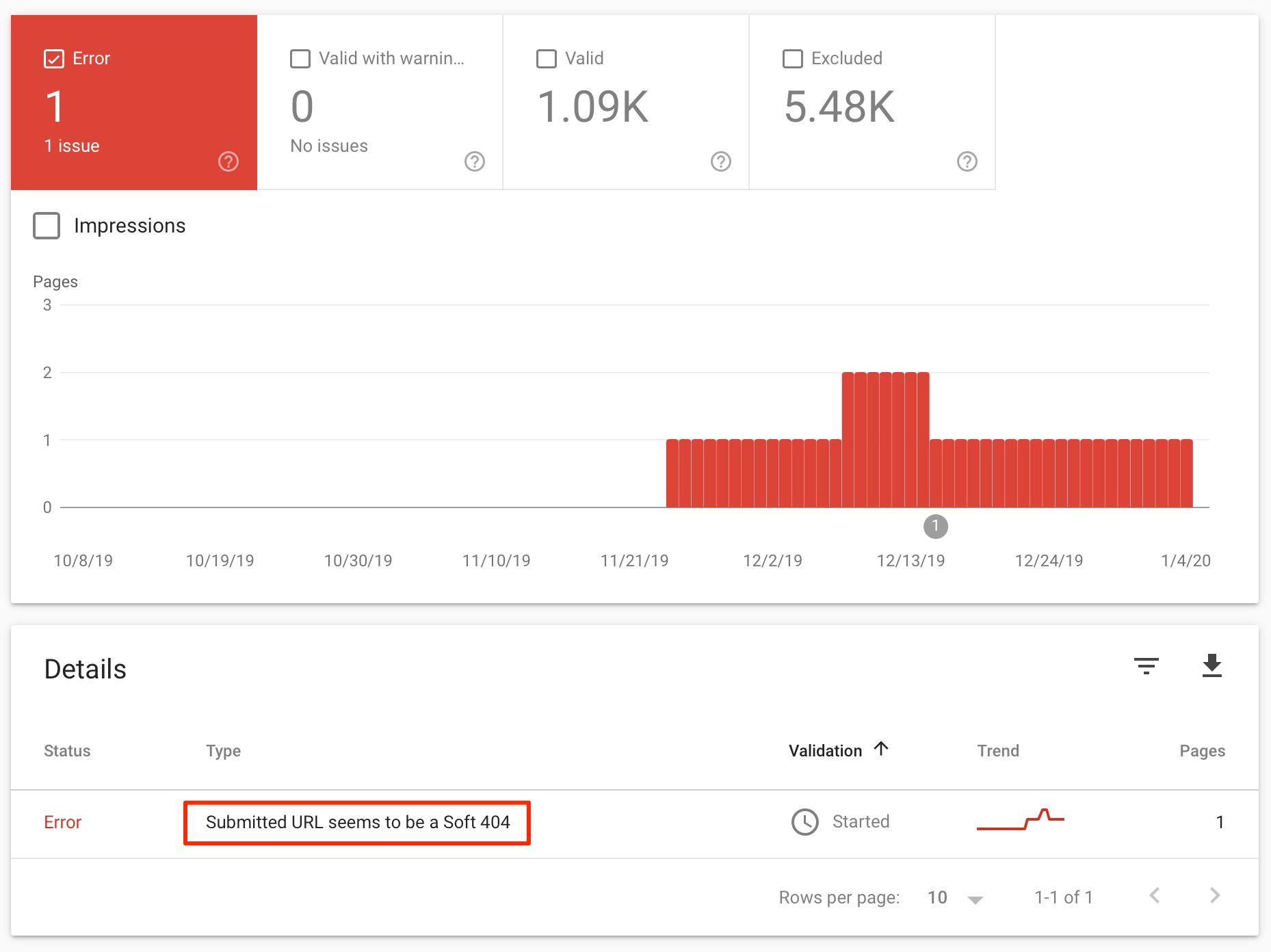

6. Fix Soft 404 errors

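A soft 404 is a page that looks like a ‘not found’ page (or has almost no content) but still returns a success (200) status code instead of a 404. Soft 404 errors can happen for many reasons and it’s not always easy to find the exact cause.

The most common causes are a misconfigured HTTP server, slow-loading pages and a large number of thin content pages on your site.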

The problem with soft 404 errors (in comparison to normal 404 errors), is that soft 404 errors waste your crawl budget because search engine crawlers keep these pages in their index and try to recrawl them.

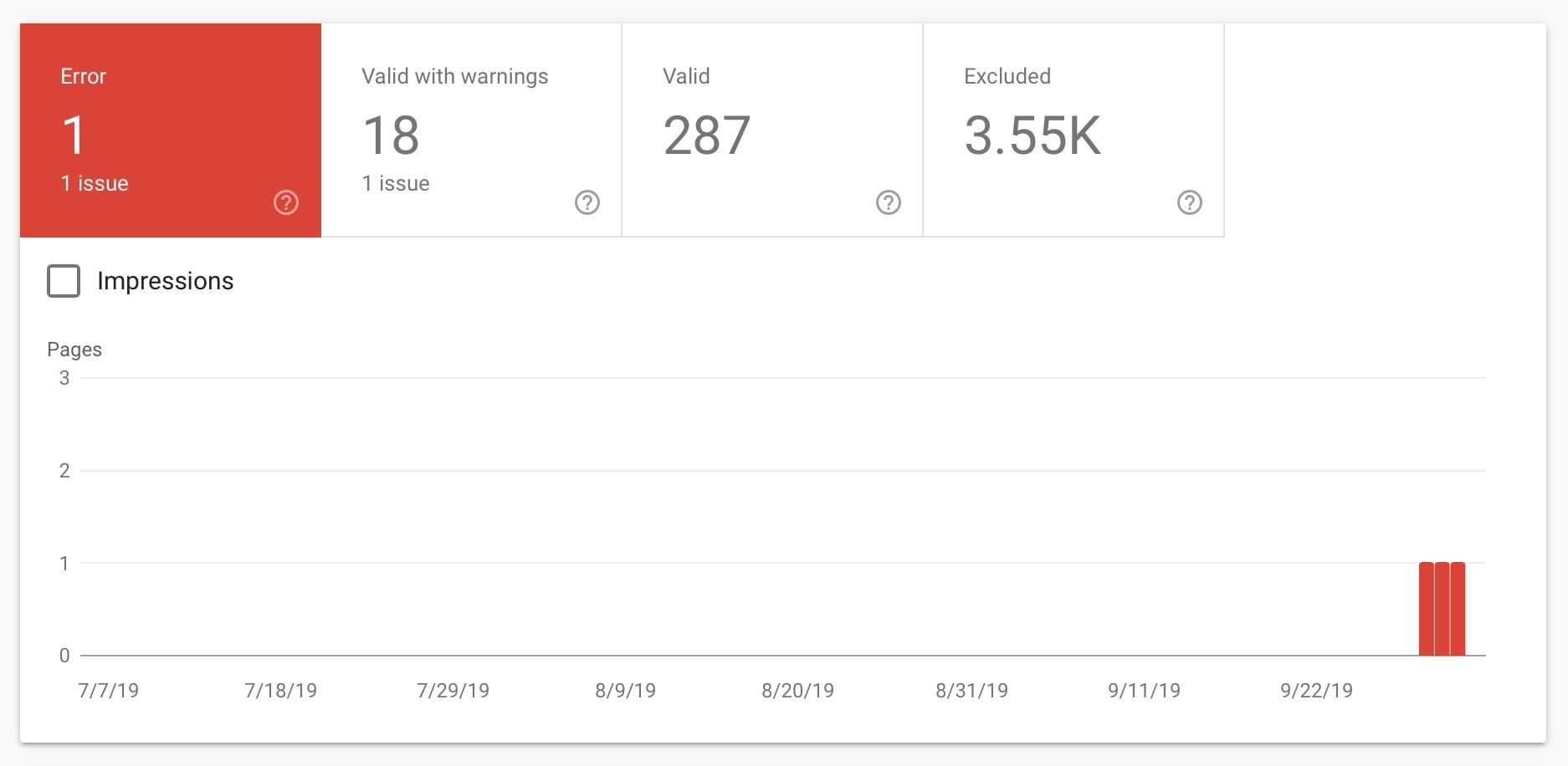

The best way to deal with soft 404 errors and optimize your crawl budget is to log in to Google Search Console and view the Coverage error report.

Click on “Submitted URL seems to be a Soft 404” to view the list of affected pages and fix them.
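If you want to screen pages yourself before they show up in the report, a rough heuristic is to look for pages that return a 200 status code but read like an error page or have very little text. The sketch below illustrates the idea only. The phrases and the word threshold are assumptions, not the rules Google uses, and you would need to fetch each page’s status code and visible text separately.

```python
# Assumed phrases that often appear on "not found" pages; adjust for your site.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "nothing was found")


def looks_like_soft_404(status_code, body_text, min_words=30):
    """Guess whether a response is a soft 404 based on status code and visible text."""
    if status_code != 200:
        return False  # a real 404 or 410 is a normal error, not a soft 404
    text = body_text.lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    # Very short pages are treated as suspicious (thin or empty content).
    return len(text.split()) < min_words


print(looks_like_soft_404(200, "Sorry, page not found."))
print(looks_like_soft_404(404, "Page not found"))
```

Pages flagged this way should either return a real 404 or 410 status code, be redirected to a relevant page, or get enough content to be useful.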

How to find and fix soft 404 errors – a step-by-step guide on how to identify soft 404s and possible ways to fix them.

7. Fix Crawl errors

Another way to increase your crawl budget is to reduce the number of crawl errors. Crawling time spent on errors that shouldn’t exist in the first place is wasted time.

The easiest way to do this is to use the “Index Coverage Report” in Google Search Console to find and fix crawl errors.

Our comprehensive guide “How to fix crawl errors in Google search console” has all the information you need.

8. Avoid having too many redirects

Another issue that may slow down how often Google crawls a website is the presence of too many redirects.

Redirects are a great way to solve duplicate content issues and soft 404 errors, but care should be taken not to create redirect chains.

When Googlebot finds a 301 redirect, it may not crawl the redirected URL immediately but will add it to the list of URLs to crawl from the particular site. If a URL is redirected to a second URL, and that URL is in turn redirected to a third, this complicates the process and slows down crawling.

Check your .htaccess and make sure that you don’t have any unnecessary redirects and that any 301 redirects are pointing to the final destination only (avoid intermediate destinations for the same URL).
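One way to find redirect chains is to list your redirects as a simple source-to-destination map and follow each one to the end. The sketch below is a minimal illustration with made-up paths and hypothetical function names. It works on a map you build yourself (for example, from your redirect rules) and does not read .htaccess or make any HTTP requests.

```python
def resolve_redirect(redirects, url, max_hops=10):
    """Follow url through the redirect map and return (final_url, number_of_hops)."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {url}")
        seen.add(url)
    return url, hops


def redirect_chains(redirects):
    """Return source URLs that need more than one hop to reach their destination."""
    return sorted(src for src in redirects if resolve_redirect(redirects, src)[1] > 1)


def flatten_redirects(redirects):
    """Return a map in which every source points directly at its final destination."""
    return {src: resolve_redirect(redirects, src)[0] for src in redirects}


# Hypothetical redirect rules: /old -> /newer -> /newest is a chain.
REDIRECTS = {"/old": "/newer", "/newer": "/newest", "/promo": "/newest"}

print(redirect_chains(REDIRECTS))
print(flatten_redirects(REDIRECTS))
```

The flattened map shows what each rule should look like once every 301 redirect points to its final destination.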

9. Make sure that you have no hacked pages

A website that is hacked has a lot more things to worry about than crawl budget, but you should know how hacked pages affect crawl budget.

If your website is hacked for some time without you knowing about it, this will reduce your crawl budget considerably. Google will lose trust in the site and index it less often.

To avoid this unpleasant situation, you can use a security service to monitor your website and regularly check the “Security Issues” report in Google Search Console (located under Security and Manual actions).

10. Improve your website’s reputation (External links)

Popular URLs tend to be crawled more often by search engines because they want to keep their content fresh in their index.

In the SEO world, the biggest factor that differentiates popular pages from the least popular pages is the number and type of backlinks.

Backlinks help in establishing trust with search engines, improve a page’s PageRank and authority and this eventually results in higher rankings.

It’s one of the fundamental SEO concepts that hasn’t changed for years.

So, having pages with links from other websites will encourage search engines to visit these pages more often, resulting in an increase in crawl budget.

Getting links from other websites is not easy. In fact, it’s one of the most difficult aspects of SEO, but doing so will make your domain stronger and improve your overall SEO.

How to Check and Interpret your Crawl Stats Report?

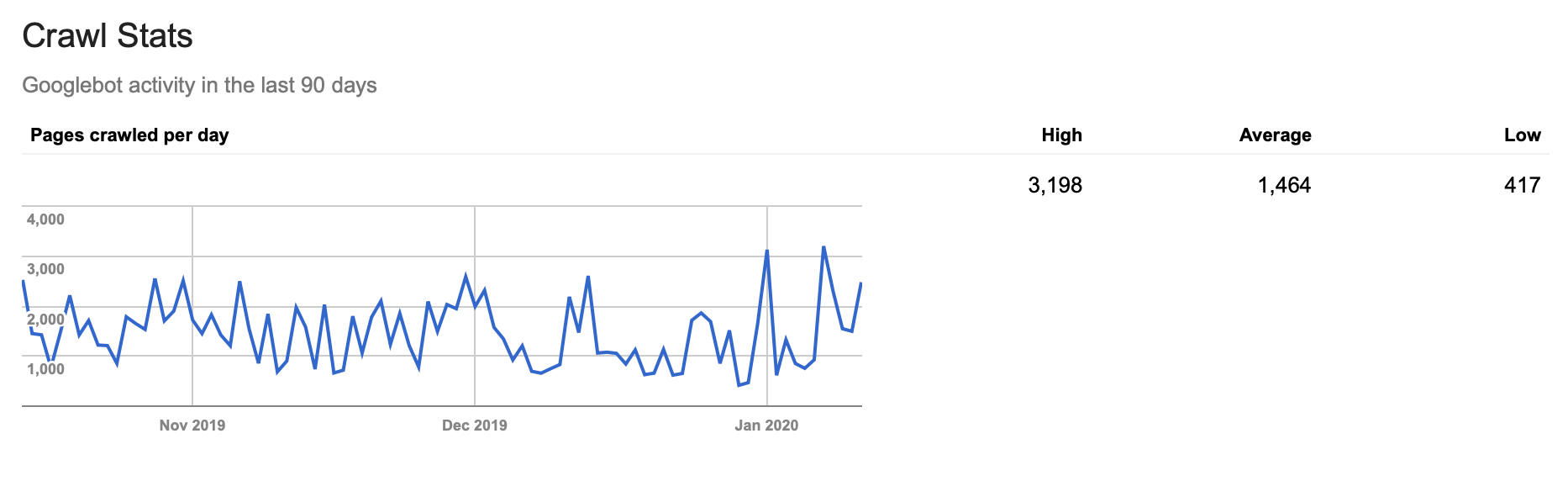

Although you should not obsess over crawl budgets and crawl stats, it’s good to review the “Crawl Stats” report in Google Search Console from time to time and look for any abnormal behavior.

The Crawl Stats report is currently available in the old version of Google Search Console. To find it, log in to your Google Search Console account and then select CRAWL STATS under “Legacy Tools and Reports”.

What this report shows is information about ALL Googlebot activity on your site for the last 90 days.

The report includes any attempt made by Googlebot to access any crawlable asset on your site, such as pages, posts, images, CSS files, JS files, PDF files and anything else that you have uploaded to your server.

That’s also the reason why the number of “Pages crawled per day” is bigger than the number of pages you have in Google’s index.

What to look for in the Crawl stats report?

When viewing the report, try to spot any sudden drops or spikes in the number of pages crawled per day. Look at a period of two weeks or a month and see if the drop or spike is continuous.

Under normal circumstances, the number of crawled pages should steadily increase over time (provided that you add new content to the site on a regular basis). If you are not making any changes, then the pattern should be similar when you compare two time periods.

A sudden drop in crawl rate can occur when:

- You added a rule to block a big part of your pages from being indexed by search engines

- Your website and server are slower than usual

- You have a lot of server errors that need your attention

- Your website is hacked

A crawl rate can spike when:

- You added a bunch of new content on the site

- Your content went viral and you got new links which increased your domain authority

- Spammers added code to your site that generates hundreds of new pages
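If you note down the daily “Pages crawled per day” numbers, you can flag sudden drops and spikes automatically by comparing each day with the average of the days before it. The sketch below is a simple illustration with made-up numbers. The 7-day window and the 50% threshold are assumptions you should tune for your own site.

```python
from statistics import mean


def crawl_anomalies(daily_counts, window=7, threshold=0.5):
    """Flag days whose crawl count differs from the trailing average by more than threshold."""
    anomalies = []
    for i in range(window, len(daily_counts)):
        baseline = mean(daily_counts[i - window:i])  # average of the previous days
        if baseline == 0:
            continue  # avoid dividing by zero when nothing was crawled
        change = (daily_counts[i] - baseline) / baseline
        if change >= threshold:
            anomalies.append((i, "spike", round(change, 2)))
        elif change <= -threshold:
            anomalies.append((i, "drop", round(change, 2)))
    return anomalies


# Hypothetical daily crawl counts: a stable week, then a drop and a spike.
DAILY_COUNTS = [100, 100, 100, 100, 100, 100, 100, 105, 40, 300]

for day, kind, change in crawl_anomalies(DAILY_COUNTS):
    print(f"day {day}: {kind} ({change:+.0%} vs. previous week)")
```

A single flagged day is usually nothing to worry about. It is worth investigating when the drop or spike continues over a couple of weeks.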

You can visit the link below to learn more about the data shown in the crawl stats report but in the majority of cases, this is not something you should worry too much about.

Crawl Stats report – A guide from Google on how to correctly interpret the data in the crawl stats report.

Key Learnings

Optimizing your crawl budget for SEO is the same process as optimizing your website for technical SEO. Anything you can do to improve your website’s usability and accessibility is good for your crawl budget, good for users and good for SEO.

Nevertheless, every little step helps SEO and when it comes to crawl budget optimization the most important step is to get rid of crawling and indexing errors. These errors waste your crawling budget and fixing them will contribute to your website’s overall health.

The other factors like website speed, duplicate content and external links, can improve site visibility in search engines and this means higher rankings and more organic traffic.

Finally, it’s good practice to take a look at your crawl stats report from time to time to spot and investigate any sudden drop or spike in crawl rate.
